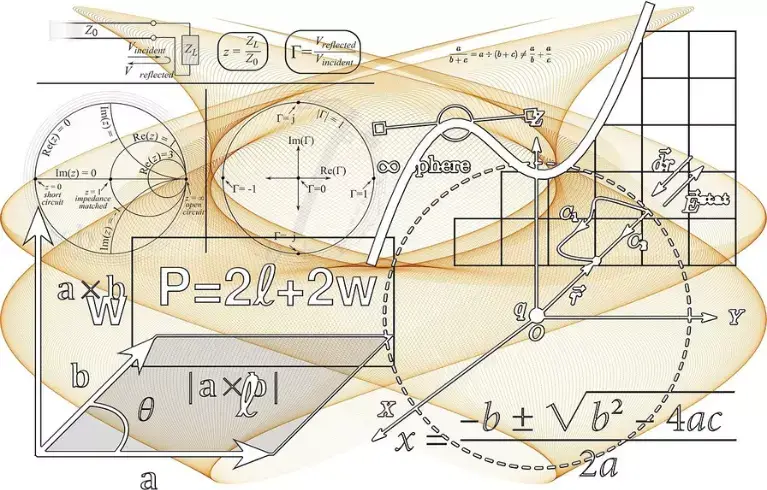

Continuous functions of one variable

We discuss the important class of continuous functions of one variable and show how to prove that a function is continuous. We also provide several examples and exercises with detailed answers to illustrate the course. To fully understand this page, you will need some tools on limits of functions. Generalities on …
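
To give a flavour of what such a proof looks like, here is a minimal sketch. It assumes the standard $\varepsilon$-$\delta$ definition of continuity at a point $a$, which is equivalent to $\lim_{x \to a} f(x) = f(a)$; the page's own definition is not shown in this excerpt. The function $f(x) = x^2$ is our own illustrative choice, not an example taken from the course.

```latex
% Continuity of f at a (standard definition, assumed here):
\[
\forall \varepsilon > 0,\ \exists \delta > 0 :\quad
|x - a| < \delta \implies |f(x) - f(a)| < \varepsilon .
\]

% Illustrative example: f(x) = x^2 at an arbitrary real a.
% If |x - a| < 1, then |x + a| \le |x - a| + 2|a| < 2|a| + 1, hence
\[
|x^2 - a^2| = |x - a|\,|x + a| \le (2|a| + 1)\,|x - a| .
\]

% Choosing
\[
\delta = \min\!\left(1,\ \frac{\varepsilon}{2|a| + 1}\right)
\]
% gives |x^2 - a^2| < \varepsilon whenever |x - a| < \delta.
```

The same pattern (bound $|f(x) - f(a)|$ by a constant times $|x - a|$ near $a$, then choose $\delta$ accordingly) works for many elementary functions, though it is only one of several possible approaches.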